Production path

Thanks to its multiple features, Istio can help you enhance your delivery process. Throughout this section, you will deploy a second version of the Middleware component without downtime or unwanted side effects on your production traffic.

| Remember to use ‘--namespace workshop’ or ‘-n workshop’ for all your kubectl commands to target the correct namespace. |

Mirroring

Description

One way of testing a new version is to deploy it next to the previous one and mirror traffic to it. This way, you can observe the new version’s behaviour under production traffic without impacting end users.

+--+
        +------------+     +-----------------+      +------------+
 #      |            +---->+                 +------>            |
~+~ +---+   Front    |     |  Middleware v1  |      |  Database  |
/ \     |            +<----+                 <------+            |
        +-------+----+     +-----------------+      +------+-----+
+--+            |
                |          +-----------------+
                |          |                 |
                +--------->+  Middleware v2  |
           Fire and forget |                 |
                           +-----------------+

| In this case, the network traffic between Front and Middleware v2 is in "fire and forget" mode, so Front doesn’t even know it is communicating with two middleware services at once. |

Execution

Let’s start by deploying a new instance of the Middleware layer:

apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: workshop
  labels:
    app: middleware
    version: v2
  name: middleware-v2
spec:
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
    type: RollingUpdate
  selector:
    matchLabels:
      app: middleware
      version: v2
  template:
    metadata:
      labels:
        app: middleware
        version: v2
    spec:
      containers:
        - image: stacklabs/istio-on-gke-middleware
          imagePullPolicy: IfNotPresent
          env:
            - name: MIDDLEWARE_ERROR_RATE # (1)
              value: "50"
            - name: MIDDLEWARE_VERSION
              value: "errors-50%"
            - name: MIDDLEWARE_DATABASE_URI
              value: http://database:8080
            - name: SPRING_CLOUD_GCP_LOGGING_PROJECT_ID
              value: "<YOUR_GCP_PROJECT_ID>" # (2)
          livenessProbe:
            httpGet:
              path: /actuator/health
              port: 8181
            initialDelaySeconds: 20
          name: middleware
          resources:
            requests:
              memory: "512Mi"
              cpu: 1
            limits:
              memory: "512Mi"
              cpu: 1
          ports:
            - containerPort: 8080
              name: http
              protocol: TCP
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  namespace: workshop
  name: middleware
spec:
  hosts:
    - middleware
  http:
    - route:
        - destination:
            host: middleware
            subset: v1
---
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  namespace: workshop
  name: middleware
spec:
  host: middleware
  subsets:
    - name: v1
      labels:
        version: v1

| 1 | This environment variable configures the component to return errors randomly at a given rate |
| 2 | Your Google Cloud Project ID |

To do so, let’s run the following command:

Λ\: $ kubectl apply --filename 04_production-path/01_mirroring/01_create-middleware-v2.yml

Now let’s take a look at your cluster’s state:

Λ\: $ kubectl --namespace workshop get services,pods
NAME                 TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
service/database     ClusterIP   10.110.225.238   <none>        8080/TCP   66m
service/front        ClusterIP   10.97.68.76      <none>        8080/TCP   66m
service/middleware   ClusterIP   10.99.161.251    <none>        8080/TCP   66m

NAME                                 READY   STATUS    RESTARTS   AGE
pod/database-v1-69cb46795f-llq5q     2/2     Running   0          66m
pod/front-v1-9cb545d75-5rtcq         2/2     Running   0          66m
pod/middleware-v1-768d6d597d-bbs2b   2/2     Running   0          66m
pod/middleware-v2-5b6b6fdcf7-dq6f4   2/2     Running   0          112s

Our app should be answering just as before:

Λ\: $ while true; do curl -qs ${CLUSTER_INGRESS_IP}; echo; done;
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T17:17:35.107Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T17:17:35.901Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T17:17:36.392Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T17:17:38.05Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T17:17:39.152Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T17:17:40.255Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T17:17:41.452Z"}

And the logs from Middleware v2 should only consist of the application’s warm-up:

Λ\: $ kubectl --namespace workshop logs -l app=middleware,version=v2 -c middleware
...
{"timestampSeconds":1576948510,"timestampNanos":446000000,"severity":"INFO","thread":"main","logger":"org.springframework.boot.actuate.endpoint.web.EndpointLinksResolver","message":"Exposing 2 endpoint(s) beneath base path \u0027/actuator\u0027","context":"default"}
{"timestampSeconds":1576948510,"timestampNanos":544000000,"severity":"INFO","thread":"main","logger":"org.springframework.boot.web.embedded.netty.NettyWebServer","message":"Netty started on port(s): 8181","context":"default"}
{"timestampSeconds":1576948510,"timestampNanos":546000000,"severity":"INFO","thread":"main","logger":"com.stacklabs.workshop.istioongke.middleware.MiddlewareApplicationKt","message":"Started MiddlewareApplicationKt in 11.284 seconds (JVM running for 12.644)","context":"default"}

Let’s now configure Istio to mirror all the traffic from Middleware v1 to Middleware v2 with the following template:

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  namespace: workshop
  name: middleware
spec:
  hosts:
    - middleware
  http:
    - route:
        - destination:
            host: middleware
            subset: version-1
      mirror: # (1)
        host: middleware
        subset: version-2
---
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  namespace: workshop
  name: middleware
spec:
  host: middleware
  subsets:
    - name: version-1
      labels:
        version: v1
    - name: version-2 # (2)
      labels:
        version: v2

| 1 | The mirror field sends a copy of all routed traffic to a specific subset |
| 2 | The definition of that subset. Here it selects pods by their version label |

To apply it, you may run:

Λ\: $ kubectl apply --filename 04_production-path/01_mirroring/02_add-mirroring-between-v1-and-v2.yml

You can now run the following loop, and observe that no errors are returned.

Λ\: $ while true; do curl -qs ${CLUSTER_INGRESS_IP}; echo; done;
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T17:17:35.107Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T17:17:35.901Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T17:17:36.392Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T17:17:38.05Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T17:17:39.152Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T17:17:40.255Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T17:17:41.452Z"}

But you should now be seeing logs in Middleware v2:

Λ\: $ kubectl --namespace workshop logs deploy/middleware-v2 --container middleware --follow
...

{"traceId":"e53b9c5fbc9615a00572cf7b3f4f7776","spanId":"0f9c5f89f6486083","spanExportable":"true","X-B3-ParentSpanId":"90d8a3e3047b83a1","parentId":"90d8a3e3047b83a1","timestampSeconds":1576950554,"timestampNanos":43000000,"severity":"INFO","thread":"reactor-http-epoll-3","logger":"com.stacklabs.workshop.istioongke.middleware.DatabaseService","message":"Call made to http://database:8080","context":"default","logging.googleapis.com/trace":"e53b9c5fbc9615a00572cf7b3f4f7776","logging.googleapis.com/spanId":"0f9c5f89f6486083"}

{"traceId":"e53b9c5fbc9615a00572cf7b3f4f7776","spanId":"0f9c5f89f6486083","spanExportable":"true","X-B3-ParentSpanId":"90d8a3e3047b83a1","parentId":"90d8a3e3047b83a1","timestampSeconds":1576950554,"timestampNanos":98000000,"severity":"INFO","thread":"parallel-1","logger":"com.stacklabs.workshop.istioongke.middleware.MiddlewareHandler","message":"middleware service in version v1 called and answered with Message(from\u003dmiddleware (v1) \u003d\u003e database (v1), date\u003d2019-12-21T17:49:14.098Z[GMT])","context":"default","logging.googleapis.com/trace":"e53b9c5fbc9615a00572cf7b3f4f7776","logging.googleapis.com/spanId":"0f9c5f89f6486083"}

{"traceId":"e53b9c5fbc9615a00572cf7b3f4f7776","spanId":"0f9c5f89f6486083","spanExportable":"true","X-B3-ParentSpanId":"90d8a3e3047b83a1","parentId":"90d8a3e3047b83a1","timestampSeconds":1576950553,"timestampNanos":599000000,"severity":"INFO","thread":"reactor-http-epoll-2","logger":"com.stacklabs.workshop.istioongke.middleware.MiddlewareHandler","message":"UI Service in version v1 starting...","context":"default","logging.googleapis.com/trace":"e53b9c5fbc9615a00572cf7b3f4f7776","logging.googleapis.com/spanId":"0f9c5f89f6486083"}

{"traceId":"e53b9c5fbc9615a00572cf7b3f4f7776","spanId":"0f9c5f89f6486083","spanExportable":"true","X-B3-ParentSpanId":"90d8a3e3047b83a1","parentId":"90d8a3e3047b83a1","timestampSeconds":1576950553,"timestampNanos":655000000,"severity":"INFO","thread":"parallel-1","logger":"com.stacklabs.workshop.istioongke.middleware.DatabaseService","message":"Before call to DatabaseService at url http://database:8080","context":"default","logging.googleapis.com/trace":"e53b9c5fbc9615a00572cf7b3f4f7776","logging.googleapis.com/spanId":"0f9c5f89f6486083"}

{"traceId":"e53b9c5fbc9615a00572cf7b3f4f7776","spanId":"0f9c5f89f6486083","spanExportable":"true","X-B3-ParentSpanId":"90d8a3e3047b83a1","parentId":"90d8a3e3047b83a1","timestampSeconds":1576950554,"timestampNanos":43000000,"severity":"INFO","thread":"reactor-http-epoll-3","logger":"com.stacklabs.workshop.istioongke.middleware.DatabaseService","message":"Call made to http://database:8080","context":"default","logging.googleapis.com/trace":"e53b9c5fbc9615a00572cf7b3f4f7776","logging.googleapis.com/spanId":"0f9c5f89f6486083"}

{"traceId":"e53b9c5fbc9615a00572cf7b3f4f7776","spanId":"0f9c5f89f6486083","spanExportable":"true","X-B3-ParentSpanId":"90d8a3e3047b83a1","parentId":"90d8a3e3047b83a1","timestampSeconds":1576950554,"timestampNanos":98000000,"severity":"INFO","thread":"parallel-1","logger":"com.stacklabs.workshop.istioongke.middleware.MiddlewareHandler","message":"middleware service in version v1 called and answered with Message(from\u003dmiddleware (v1) \u003d\u003e database (v1), date\u003d2019-12-21T17:49:14.098Z[GMT])","context":"default","logging.googleapis.com/trace":"e53b9c5fbc9615a00572cf7b3f4f7776","logging.googleapis.com/spanId":"0f9c5f89f6486083"}

{"traceId":"93d0f1fc867fe4905a701e4439982e8e","spanId":"90251e49cbae69b2","spanExportable":"true","X-B3-ParentSpanId":"1d38b109d1878b87","parentId":"1d38b109d1878b87","timestampSeconds":1576950554,"timestampNanos":683000000,"severity":"ERROR","thread":"reactor-http-epoll-2","logger":"com.stacklabs.workshop.istioongke.middleware.MiddlewareHandler","message":"Random error ?","context":"default","logging.googleapis.com/trace":"93d0f1fc867fe4905a701e4439982e8e","logging.googleapis.com/spanId":"90251e49cbae69b2"} (1)

...

| 1 | This is an error log |
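To put a number on what the logs show, a small helper can tally log entries per severity. This is only a sketch: it assumes one JSON object per line with a "severity" field, as in the output above, and `tally_severity` is a hypothetical name that is not part of the workshop material.

```shell
# tally_severity: count JSON log lines per "severity" value read from stdin.
# Assumption: each log entry is a single line containing "severity":"LEVEL".
tally_severity() {
  grep -o '"severity":"[A-Z]*"' \
    | cut -d'"' -f4 \
    | sort \
    | uniq -c \
    | awk '{ print $2 "=" $1 }'
}

# Example (requires cluster access):
#   kubectl --namespace workshop logs deploy/middleware-v2 --container middleware | tally_severity
```

While the error-injecting version is deployed and traffic is mirrored, the tally for Middleware v2 should include an ERROR line; the same command against Middleware v1 gives a baseline to compare with.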

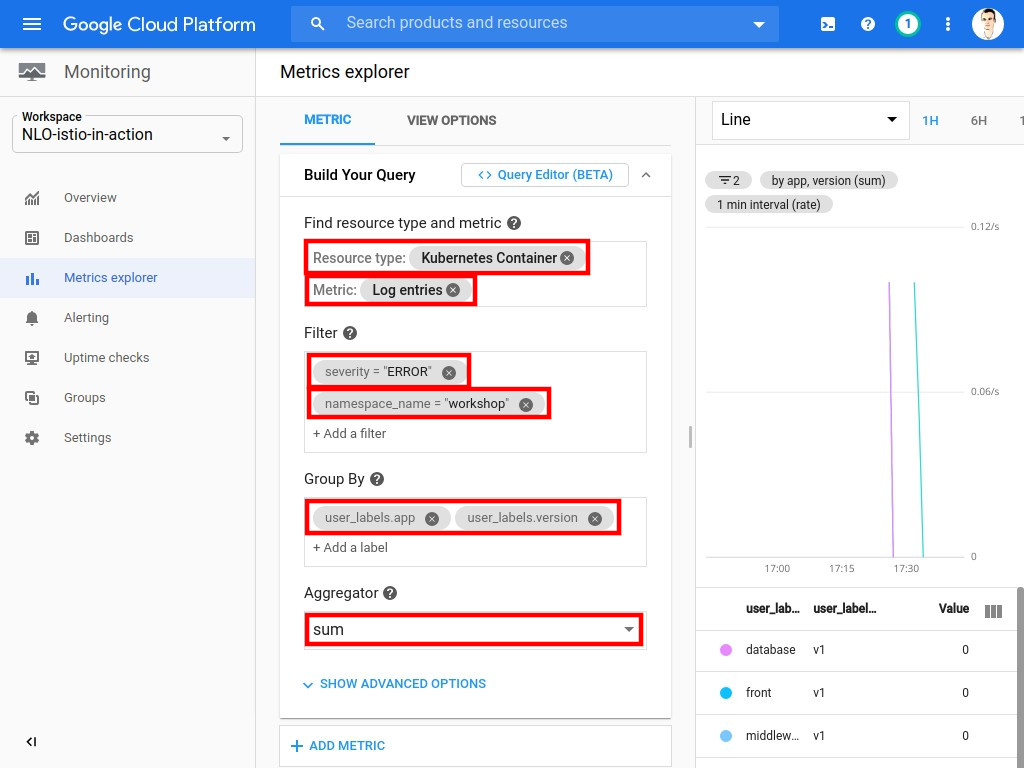

To analyze the system in more depth, let’s define the following metric in Monitoring:

| The Monitoring interface is a bit buggy when it comes to some specific metrics and resources. To get it working properly, first type and select the metric name, and only then the resource type; otherwise you may not find everything in the search field. |

- Metric: Log Entries (i.e. logging.googleapis.com/log_entry_count)
- Resource Type: Kubernetes Container (i.e. k8s_container)
- Filter:
  - severity = "ERROR"
  - namespace_name = "workshop"
- Group By:
  - user_labels.app (type and select app)
  - user_labels.version (type and select version)
- Aggregator: sum

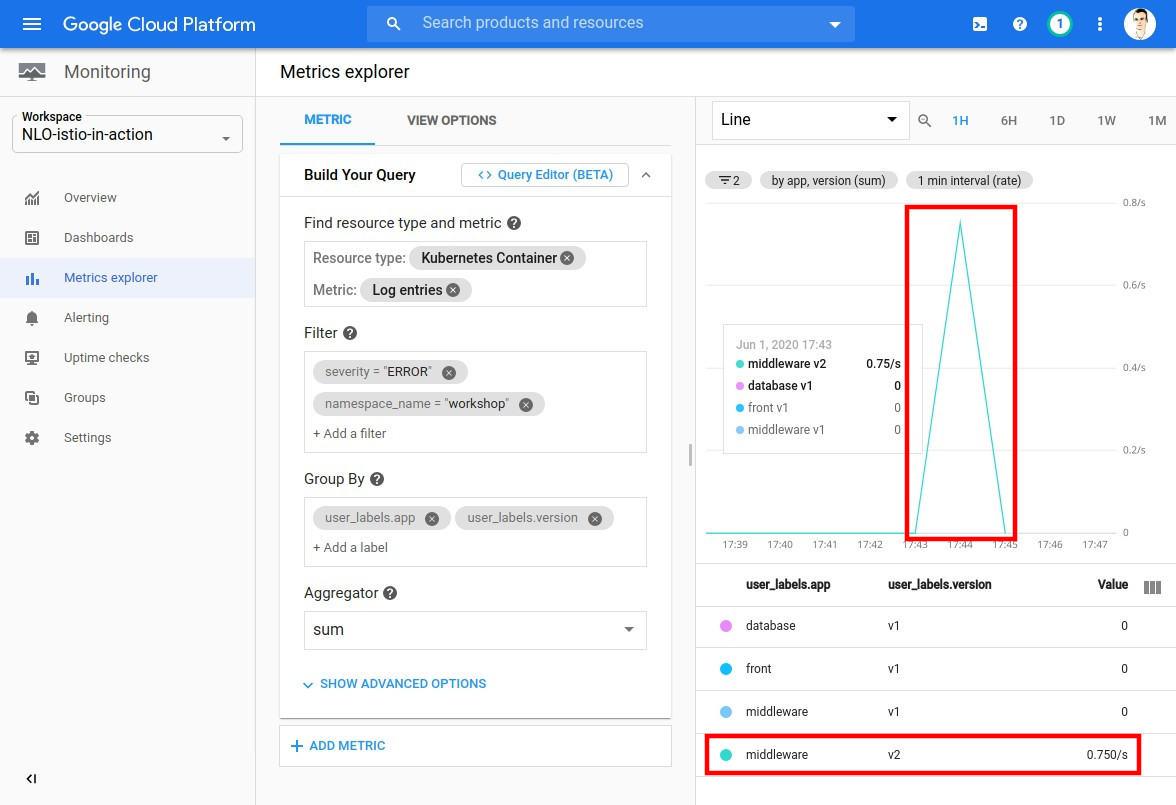

Once the configuration is complete, you should see multiple errors coming from the new version:

Now let’s apply the fixed YAML template of the V2 Middleware component:

apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: workshop
  labels:
    app: middleware
    version: v2
  name: middleware-v2
spec:
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
    type: RollingUpdate
  selector:
    matchLabels:
      app: middleware
      version: v2
  template:
    metadata:
      labels:
        app: middleware
        version: v2
    spec:
      containers:
        - image: stacklabs/istio-on-gke-middleware
          imagePullPolicy: IfNotPresent
          env:
            - name: MIDDLEWARE_VERSION
              value: "v2" # (1)
            - name: MIDDLEWARE_DATABASE_URI
              value: http://database:8080
            - name: SPRING_CLOUD_GCP_LOGGING_PROJECT_ID
              value: "<YOUR_GCP_PROJECT_ID>" # (2)
          livenessProbe:
            httpGet:
              path: /actuator/health
              port: 8181
            initialDelaySeconds: 20
          name: middleware
          resources:
            requests:
              memory: "512Mi"
              cpu: 1
            limits:
              memory: "512Mi"
              cpu: 1
          ports:
            - containerPort: 8080
              name: http
              protocol: TCP

| 1 | The fixed version 👍 |
| 2 | Your Google Cloud Project ID |

To apply the template, simply run:

Λ\: $ kubectl apply --filename 04_production-path/01_mirroring/03_create-fixed-middleware-v2.yml

If you run the siege command again, you should see errors decreasing in Monitoring as well as in your components’ logs.

|

When using mirroring in production, it is important to make sure that any stateful service will know how to respond to such traffic. In our example app, the mirrored request to Middleware v2 is fire and forget, but Middleware v2 still calls the Database:

+--+
        +------------+     +-----------------+      +------------+
 #      |            +---->+                 +------>            |
~+~ +---+   Front    |     |  Middleware v1  |      |  Database  |
/ \     |            +<----+                 <------+            |
        +-------+----+     +-----------------+      +---^--+-----+
+--+            |                                       |
                |          +-----------------+          |
                |          |                 |          |
                +--------->+  Middleware v2  |-----------
           Fire and forget |                 |  but this request still happens
                           +-----------------+

In the real world, you would need to make sure that any state-sensitive component is either mocked, replaced or at least backed up for the duration of your test. |

Canary release

Description

The main goal of a canary release is to be able to deploy 2 versions of a service and let the users decide if they want to access the "canary" release.

+---+
 #                                   +-----------------+
~+~ +======================+         |                 |============+
/ \                        |         |  Middleware v1  |            |
User1               +------v-----+   |                 |      +-----v------+
                    |            |===>-----------------+      |            |
                    |   Front    |                            |  Database  |
                    |            +-->+-----------------+      |            |
User2               +------^-----+   |                 |      +-----^------+
 #                         |         |  Middleware v2  |            |
~+~ +----------------------+         |                 +------------+
/ \  middleware: upgrade             +-----------------+
+---+

Depending on the header provided by the user, the flow inside the system is different (== for User1 and -- for User2).

Execution

| Before proceeding make sure you have deployed Front V1 and Middleware V1 & V2. |

Λ\: $ kubectl --namespace workshop get services,pods
NAME                 TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
service/database     ClusterIP   10.110.225.238   <none>        8080/TCP   120m
service/front        ClusterIP   10.97.68.76      <none>        8080/TCP   120m
service/middleware   ClusterIP   10.99.161.251    <none>        8080/TCP   120m

NAME                                 READY   STATUS    RESTARTS   AGE
pod/database-v1-69cb46795f-llq5q     2/2     Running   0          120m
pod/front-v1-9cb545d75-5rtcq         2/2     Running   0          120m
pod/middleware-v1-768d6d597d-bbs2b   2/2     Running   0          120m
pod/middleware-v2-5b6b6fdcf7-dq6f4   2/2     Running   0          55m

The goal here is to use HTTPMatchRequest (see the Istio documentation) to route traffic depending on the value of the x-istio-formation-middleware HTTP header.

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  namespace: workshop
  name: middleware
spec:
  hosts:
    - middleware
  http:
    - match:
        - headers:
            x-istio-formation-middleware:
              exact: upgrade # (1)
      route:
        - destination:
            host: middleware
            subset: version-2 # (2)
    - route:
        - destination:
            host: middleware
            subset: version-1 # (3)
---
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  namespace: workshop
  name: middleware
spec:
  host: middleware
  subsets:
    - name: version-1
      labels:
        version: v1
    - name: version-2
      labels:
        version: v2

| 1 | The value to put in the header to trigger the redirection |
| 2 | The new destination if the header matches |
| 3 | The default route used otherwise |

To enable this feature, run the following command:

Λ\: $ kubectl apply --filename 04_production-path/02_canary-release/01_add-http-header-routing.yml

Now let’s try it out with some curl commands on the ingress gateway:

Λ\: $ curl ${CLUSTER_INGRESS_IP}; echo;
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T18:21:36.334Z"}

Λ\: $ curl ${CLUSTER_INGRESS_IP} --header "x-istio-formation-middleware: upgrade"; echo;
{"from":"front (v1) => middleware (v2) => database (v1)","date":"2019-12-21T18:53:20.881Z"}

Λ\: $ curl ${CLUSTER_INGRESS_IP} -H "x-istio-formation-middleware: nope"; echo;
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T18:54:31.227Z"}

| This works only because the Front application forwards incoming headers named x-istio-formation-middleware to its child microservices. Here this is handled by Spring Cloud Sleuth, but it could also be done manually in the application code. |
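The three curl checks above can be turned into a repeatable test. The sketch below assumes the response format shown in this section ("middleware (vN)" inside the "from" field); `middleware_version` and `check_route` are hypothetical helper names, not part of the workshop.

```shell
# middleware_version: print the middleware version named in each JSON response on stdin.
middleware_version() {
  sed -n 's/.*middleware (\([^)]*\)).*/\1/p'
}

# check_route EXPECTED: succeed only if the response on stdin was served by EXPECTED.
check_route() {
  expected="$1"
  actual="$(middleware_version)"
  if [ "$actual" = "$expected" ]; then
    echo "ok: middleware $actual"
  else
    echo "unexpected: wanted $expected, got ${actual:-nothing}"
    return 1
  fi
}

# Example (requires cluster access):
#   curl -qs ${CLUSTER_INGRESS_IP} --header "x-istio-formation-middleware: upgrade" | check_route v2
#   curl -qs ${CLUSTER_INGRESS_IP} | check_route v1
```

Because `check_route` returns a non-zero status on a mismatch, it can also be chained in a script that stops as soon as the routing rule no longer behaves as expected.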

To go a bit further, you can try to:

- Create a dashboard in Monitoring to follow the traffic coming into the new version of the application
- Follow the logs of both applications in parallel
- Follow the distributed traces of each application in the Trace page

Traffic Splitting

Description

Traffic splitting lets you distribute traffic between multiple versions of the middleware component. You can adjust the distribution with a weight attribute.

                                    +-----------------+
                            90%     |                 +------------+
                          +-------->+  Middleware v1  |            |
+---+     +------------+  |         |                 |      +-----v------+
  #       |            |  |         +-----------------+      |            |
 ~+~ +--->+   Front    +--+                                  |  Database  |
 / \      |            |  |         +-----------------+      |            |
+---+     +------------+  |         |                 |      +-----^------+
                          +-------->+  Middleware v2  |            |
                            10%     |                 +------------+
                                    +-----------------+

In this case, calls from users go through Middleware v1 90% of the time and through Middleware v2 only 10% of the time.

Execution

| Make sure you have deployed Front V1 and Middleware V1 & V2. |

The idea of this section is to demonstrate how Istio can randomly redirect incoming requests between different versions of a component.

The following YAML defines a weight property that configures how the proxy distributes traffic between different services.

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  namespace: workshop
  name: middleware
spec:
  hosts:
    - middleware
  http:
    - route:
        - destination:
            host: middleware
            subset: version-2
          weight: 10 # (1)
        - destination:
            host: middleware
            subset: version-1
          weight: 90 # (2)
---
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  namespace: workshop
  name: middleware
spec:
  host: middleware
  subsets:
    - name: version-1
      labels:
        version: v1
    - name: version-2
      labels:
        version: v2

| 1 | The percentage of requests to send to the version-2 subset |
| 2 | The percentage of requests to send to the version-1 subset |

It can be applied with the following command:

Λ\: $ kubectl apply --filename 04_production-path/03_traffic-splitting/01_add-traffic-weight-splitting.yml

Now let’s run a series of requests and take a look at the responses:

Λ\: $ while true; do curl -qs ${CLUSTER_INGRESS_IP}; echo; done;
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T19:36:51.254Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T19:36:51.518Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T19:36:52.084Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T19:36:52.926Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T19:36:53.801Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T19:36:54.294Z"}
{"from":"front (v1) => middleware (v2) => database (v1)","date":"2019-12-21T19:36:54.807Z"} (1)
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T19:36:55.638Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T19:36:56.054Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T19:36:56.6Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T19:36:57.147Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T19:36:57.413Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T19:36:57.48Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T19:36:58.073Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T19:36:58.663Z"}
{"from":"front (v1) => middleware (v2) => database (v1)","date":"2019-12-21T19:36:59.329Z"} (2)
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T19:36:59.844Z"}
{"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-21T19:36:59.909Z"}

| 1 | First split |
| 2 | Second split |
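Eyeballing the output works for a handful of requests, but a quick tally makes the split easier to compare with the configured weights. The sketch below assumes the response format shown above; `split_ratio` is a hypothetical helper name.

```shell
# split_ratio: report how many responses each middleware version served,
# as "<version> <count> <percentage>", one version per line.
split_ratio() {
  sed -n 's/.*middleware (\([^)]*\)).*/\1/p' \
    | sort \
    | uniq -c \
    | awk '{ count[$2] = $1; total += $1 }
           END { for (v in count) printf "%s %d %.0f%%\n", v, count[v], 100 * count[v] / total }' \
    | sort
}

# Example (requires cluster access), sampling 200 requests:
#   for i in $(seq 200); do curl -qs ${CLUSTER_INGRESS_IP}; echo; done | split_ratio
```

The 18 responses listed above contain 2 answers from Middleware v2, roughly 11%. Since requests are distributed randomly, a small sample will rarely match the 90/10 weights exactly; a larger sample should get closer.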

This feature is particularly useful when you want to progressively start sending users to a new version of your platform. You create several templates and apply them one by one until you have completely migrated your application.
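Rather than maintaining one file per step, the intermediate templates could be generated. The following is a sketch only: `render_split` is a hypothetical helper, the subset names assume the DestinationRule from this section is already applied, and the steps and pauses are arbitrary choices to adapt to your own rollout.

```shell
# render_split V2_WEIGHT: print a VirtualService that sends V2_WEIGHT percent of the
# traffic to the version-2 subset and the remainder to version-1.
render_split() {
  v2_weight="$1"
  v1_weight=$((100 - v2_weight))
  cat <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  namespace: workshop
  name: middleware
spec:
  hosts:
    - middleware
  http:
    - route:
        - destination:
            host: middleware
            subset: version-2
          weight: ${v2_weight}
        - destination:
            host: middleware
            subset: version-1
          weight: ${v1_weight}
EOF
}

# Example (requires cluster access): raise the share of v2 step by step,
# leaving time between steps to watch logs and Monitoring.
#   for w in 25 50 75; do render_split "$w" | kubectl apply --filename -; sleep 300; done
```

Each step only replaces the VirtualService, so rolling back means re-applying the previous weights.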

To go a bit further, you can try to:

- Perform a complete update from V1 to V2 of the Middleware component while your application is under Siege. Did you notice any unavailability or downtime?