Application bootstrap

Anatomy

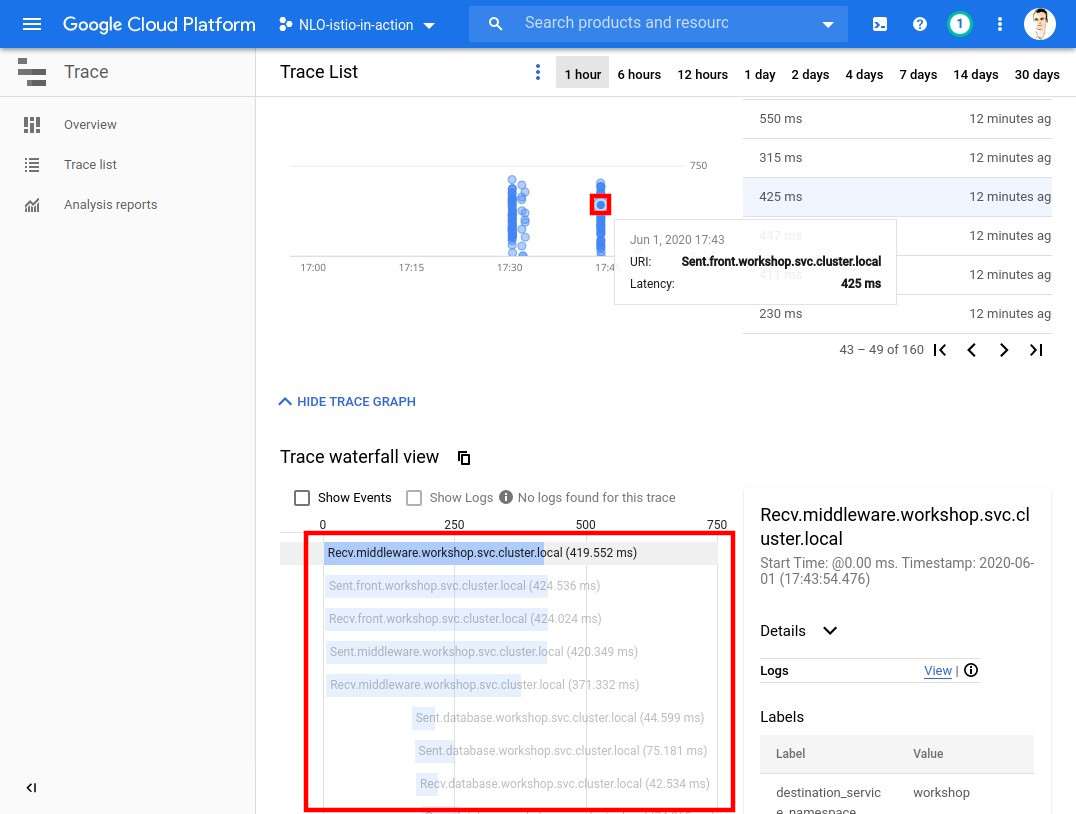

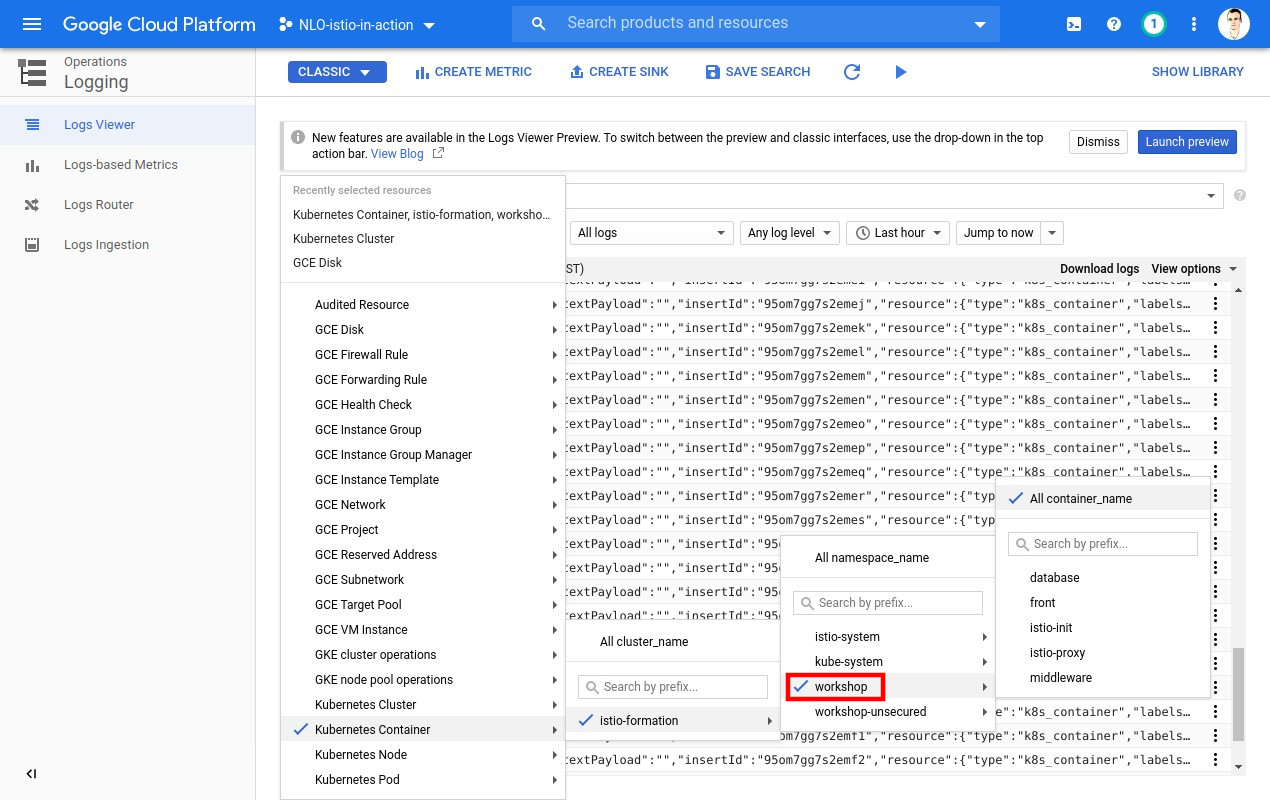

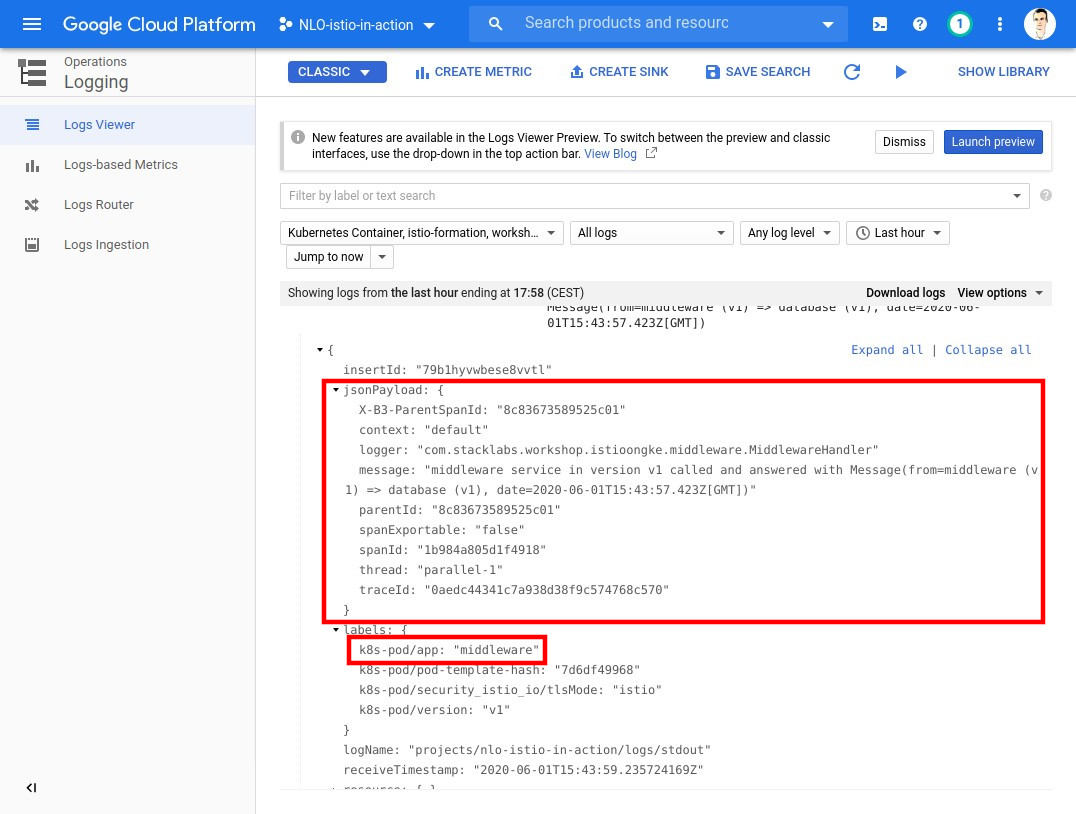

To start playing with Istio, you will deploy a fully-featured application and monitor its behaviour through the cloud console.

You will create a namespace called workshop with three components:

- Front, a Kotlin server that accepts incoming requests
- Middleware, a Kotlin transfer service
- Database, a Kotlin database mock

             +-----------+     +--------------+     +------------+
     #       |           |---->|              |---->|            |
    ~|~ ---->+   Front   |     |  Middleware  |     |  Database  |
    / \      |           |<----|              |<----|            |
             +-----------+     +--------------+     +------------+

Kubernetes Manifests description

Let’s take a look at what we are about to deploy piece by piece.

Namespace

    apiVersion: v1
    kind: Namespace # (1)
    metadata:
      labels:
        istio-injection: enabled # (2)
      name: workshop # (3)

| 1 | Creation of the Namespace |
| 2 | Activation of automatic sidecar container injection |
| 3 | Name of the namespace for the workshop |

Services

    apiVersion: v1
    kind: Service # (1)
    metadata:
      namespace: workshop
      name: front # (2)
      labels:
        app: front
    spec:
      ports:
        - name: http
          port: 8080 # (3)
      selector:
        app: front

| 1 | Creation of the Service |
| 2 | Name of the Service |
| 3 | Exposed port(s) of the Service |

A Service is created for each component (front, middleware and database).

Deployment

    apiVersion: apps/v1
    kind: Deployment # (1)
    metadata:
      namespace: workshop
      labels:
        app: front
        version: v1
      name: front-v1 # (2)
    spec:
      selector:
        matchLabels:
          app: front
          version: v1
      template:
        metadata:
          labels:
            app: front
            version: v1
        spec:
          containers:
            - image: stacklabs/istio-on-gke-front
              imagePullPolicy: Always
              env:
                - name: FRONT_MIDDLEWARE_URI
                  value: http://middleware:8080 # (3)
                - name: SPRING_CLOUD_GCP_LOGGING_PROJECT_ID
                  value: "<YOUR_GCP_PROJECT_ID>" # (4)
              livenessProbe:
                httpGet:
                  path: /actuator/health
                  port: 8181
                initialDelaySeconds: 20
              name: front
              resources:
                requests:
                  memory: "512Mi"
                  cpu: 1
                limits:
                  memory: "512Mi"
                  cpu: 1
              ports:
                - containerPort: 8080
                  name: http
                  protocol: TCP

| 1 | Creation of the Deployment |
| 2 | Name of the Deployment |
| 3 | Environment variable configuring front to communicate with middleware, matching the pattern http://<name_of_service>:<port_of_service>/ |
| 4 | Your project ID, which allows trace and log propagation in the cloud console. This value will be updated with YOUR own project ID. |

A Deployment is created for each component (front, middleware and database).
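The URL pattern from callout 3 can be sketched as a small shell helper. The function names below are hypothetical and not part of the workshop files; the fully qualified form assumes the default cluster domain `cluster.local`, which may differ on your cluster.

```shell
# Hypothetical helpers illustrating the in-cluster URL pattern
# http://<name_of_service>:<port_of_service>

# Short form: resolvable from pods living in the same namespace as the Service.
service_url() {
  printf 'http://%s:%s\n' "$1" "$2"
}

# Fully qualified form: <service>.<namespace>.svc.cluster.local
# (assumes the default cluster domain, cluster.local).
service_url_fqdn() {
  printf 'http://%s.%s.svc.cluster.local:%s\n' "$1" "$2" "$3"
}

service_url middleware 8080                 # prints http://middleware:8080
service_url_fqdn middleware workshop 8080   # prints http://middleware.workshop.svc.cluster.local:8080
```

The short form is what FRONT_MIDDLEWARE_URI uses, which works here because front and middleware both live in the workshop namespace.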

Ingress Gateway

    apiVersion: networking.istio.io/v1alpha3
    kind: Gateway # (1)
    metadata:
      namespace: workshop
      name: front # (2)
    spec:
      selector:
        istio: ingressgateway
      servers:
        - port:
            number: 80 # (3)
            name: http
            protocol: HTTP
          hosts:
            - "*" # (4)

| 1 | Creation of the Ingress Gateway |
| 2 | Name of the gateway, which will be used in other manifests |
| 3 | Port number to open on the cluster |
| 4 | Allow all ("*") hosts to be mapped to this ingress gateway |

Only the front component is exposed, so there is only one Gateway.

Virtual Service

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService # (1)
    metadata:
      namespace: workshop
      name: front
    spec:
      hosts:
        - "*" # (2)
      gateways:
        - front # (3)
      http:
        - route:
            - destination: # (4)
                host: front # (5)
                subset: version-1

| 1 | Creation of the VirtualService |
| 2 | Host associated with this virtual service ("*" means all hosts in this case) |
| 3 | Name of the Gateway associated with this virtual service (callout 2 in Ingress Gateway) |
| 4 | Traffic destination of the virtual service |
| 5 | Host used for the destination |

A VirtualService is created for each component (front, middleware and database), but only the front one sets spec.hosts to "*" (any host) and defines spec.gateways.

Destination Rule

    apiVersion: networking.istio.io/v1alpha3
    kind: DestinationRule # (1)
    metadata:
      namespace: workshop
      name: front
    spec:
      host: front # (2)
      subsets:
        - name: version-1 # (3)
          labels:
            version: v1 # (4)

| 1 | Creation of the DestinationRule |
| 2 | Host represented by this DestinationRule. Referenced by callout 5 of the VirtualService |
| 3 | Name of a subset |
| 4 | Pod label to route traffic to |

A DestinationRule is created for each component (front, middleware and database).
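To make the chain from VirtualService to pods more concrete: the destination host front resolves to the Service (selector app=front), and the subset version-1 narrows that to pods labelled version=v1. The sketch below builds the equivalent label selector; the function names are hypothetical, and the kubectl call is only defined here, not executed, since it needs access to the cluster.

```shell
# Hypothetical helper: combine the Service selector (app=<name>) with the
# label carried by a DestinationRule subset (version=<version>).
subset_selector() {
  printf 'app=%s,version=%s\n' "$1" "$2"
}

# Lists the pods that traffic for a given subset would reach.
# Requires kubectl configured against the workshop cluster.
pods_for_subset() {
  kubectl --namespace workshop get pods --selector "$(subset_selector "$1" "$2")"
}

subset_selector front v1   # prints app=front,version=v1
```

With cluster access, `pods_for_subset front v1` should list the pods created by the front-v1 Deployment.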

Workshop resources 🚩

| The following resources will be referred to throughout the workshop, so do not miss this step! |

You can download the resources and set them up with the following command:

    Λ\: $ wget -qO- https://istio-in-action.training.stack-labs.com/istio-on-gke/1.3/02_workshop/_attachments/resources.tar.gz \
        | tar -xv \
        | xargs sed -i "s/<YOUR_GCP_PROJECT_ID>/${PROJECT_ID:-$DEVSHELL_PROJECT_ID}/g" # (1)

| 1 | If running outside of Google’s Cloud Shell, replace DEVSHELL_PROJECT_ID with your Google Cloud project identifier |
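The `${PROJECT_ID:-$DEVSHELL_PROJECT_ID}` expansion in the command above uses PROJECT_ID when it is set and non-empty, and falls back to DEVSHELL_PROJECT_ID otherwise. The following sketch reproduces the substitution on standard input rather than on the downloaded files; both function names are hypothetical and exist only for illustration.

```shell
# Hypothetical helper: resolve the project ID the same way the download
# command does (PROJECT_ID first, then DEVSHELL_PROJECT_ID).
resolve_project_id() {
  printf '%s\n' "${PROJECT_ID:-${DEVSHELL_PROJECT_ID:-}}"
}

# Replace the placeholder in text read from stdin and write the result to
# stdout. Unlike `sed -i`, this does not modify any file in place.
fill_project_id() {
  sed "s/<YOUR_GCP_PROJECT_ID>/$(resolve_project_id)/g"
}
```

For example, with `PROJECT_ID=my-project` exported, piping `value: "<YOUR_GCP_PROJECT_ID>"` through `fill_project_id` yields `value: "my-project"`.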

Deploy

Let’s now deploy the application:

    Λ\: $ kubectl apply --filename 03_application-bootstrap/application-base.yml
    namespace/workshop created
    service/database created
    deployment.apps/database-v1 created
    virtualservice.networking.istio.io/database created
    destinationrule.networking.istio.io/database created
    service/middleware created
    deployment.apps/middleware-v1 created
    virtualservice.networking.istio.io/middleware created
    destinationrule.networking.istio.io/middleware created
    service/front created
    deployment.apps/front-v1 created
    gateway.networking.istio.io/front created
    virtualservice.networking.istio.io/front created
    destinationrule.networking.istio.io/front created

| This file can be used to "reset" your environment at any moment or before each step, so keep it clean just in case. |

Let’s take a look at what was just deployed:

    Λ\: $ kubectl get services,deployments,pods,virtualservices,destinationrules,gateway --namespace workshop --output wide
    NAME                 TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE   SELECTOR
    service/database     ClusterIP   10.121.9.53    <none>        8080/TCP   96s   app=database
    service/front        ClusterIP   10.121.1.11    <none>        8080/TCP   95s   app=front
    service/middleware   ClusterIP   10.121.14.56   <none>        8080/TCP   95s   app=middleware

    NAME                                  READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS   IMAGES                              SELECTOR
    deployment.extensions/database-v1     1/1     1            1           96s   database     stacklabs/istio-on-gke-database     app=database,version=v1
    deployment.extensions/front-v1        1/1     1            1           95s   front        stacklabs/istio-on-gke-front        app=front,version=v1
    deployment.extensions/middleware-v1   1/1     1            1           95s   middleware   stacklabs/istio-on-gke-middleware   app=middleware,version=v1

    NAME                                 READY   STATUS    RESTARTS   AGE   IP          NODE                                             NOMINATED NODE   READINESS GATES
    pod/database-v1-88db48f9b-t2stq      2/2     Running   0          96s   10.56.1.6   gke-istio-formation-default-pool-7809b252-jkzf   <none>           <none>
    pod/front-v1-8b76df86-4m9t8          2/2     Running   0          95s   10.56.2.6   gke-istio-formation-default-pool-7809b252-1kl1   <none>           <none>
    pod/middleware-v1-84cbf5cb5d-hxx4x   2/2     Running   0          95s   10.56.0.5   gke-istio-formation-default-pool-7809b252-5svv   <none>           <none>

    NAME                                            GATEWAYS   HOSTS          AGE
    virtualservice.networking.istio.io/database                [database]     1m
    virtualservice.networking.istio.io/front        [front]    [*]            1m
    virtualservice.networking.istio.io/middleware              [middleware]   1m

    NAME                                             HOST         AGE
    destinationrule.networking.istio.io/database     database     1m
    destinationrule.networking.istio.io/front        front        1m
    destinationrule.networking.istio.io/middleware   middleware   1m

    NAME                                AGE
    gateway.networking.istio.io/front   1m

Congratulations 🎉! You have successfully deployed your first application in an Istio-driven Kubernetes cluster.

Cluster IP 🚩

| The following environment variable will be referred to throughout the workshop, so do not miss this step! |

    # For your current terminal session
    Λ\: $ export CLUSTER_INGRESS_IP=$(kubectl --namespace istio-system get service/istio-ingressgateway -o json | jq '.status.loadBalancer.ingress[0].ip' -r)

    # For any other terminal session you may start
    Λ\: $ echo "export CLUSTER_INGRESS_IP=${CLUSTER_INGRESS_IP}" >> ${HOME}/.bashrc

Generate traffic
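Before sending requests, it can be worth checking that CLUSTER_INGRESS_IP actually holds an address. If the load balancer has not been assigned an external IP yet, the jq expression used for the export may print the literal string `null`. The sketch below is an optional guard; `is_ipv4` is a hypothetical helper, not part of the workshop resources.

```shell
# Hypothetical helper: succeeds when the argument has the shape of a dotted
# IPv4 address. It checks the format only, not that each octet is <= 255.
is_ipv4() {
  printf '%s\n' "$1" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$'
}

if is_ipv4 "${CLUSTER_INGRESS_IP:-}"; then
  echo "Ingress IP looks usable: ${CLUSTER_INGRESS_IP}"
else
  echo "CLUSTER_INGRESS_IP is empty or not an IPv4 address; re-run the export once the load balancer has an external IP." >&2
fi
```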

In order to see some traffic in the cluster, let’s issue some HTTP requests with curl.

    Λ\: $ curl ${CLUSTER_INGRESS_IP}; echo;
    {"from":"front (v1) => middleware (v1) => database (v1)","date":"2019-12-13T15:28:48.393Z"}

Now let’s generate a lot of traffic.

We will be using the tool named Siege packaged inside the docker image yokogawa/siege:

    Λ\: $ docker run --rm -it yokogawa/siege ${CLUSTER_INGRESS_IP} -t 30S
    New configuration template added to /root/.siege
    Run siege -C to view the current settings in that file
    ** SIEGE 3.0.5
    ** Preparing 25 concurrent users for battle.
    The server is now under siege...

| During this operation, numerous requests are sent to your cluster. Don’t forget to stop it with Ctrl+C once you have generated enough traffic. |

You will then be presented with Siege’s run report.

    Transactions:               57 hits
    Availability:               100.00 %
    Elapsed time:               3.36 secs
    Data transferred:           0.00 MB
    Response time:              1.18 secs
    Transaction rate:           16.96 trans/sec
    Throughput:                 0.00 MB/sec
    Concurrency:                20.10
    Successful transactions:    57
    Failed transactions:        0
    Longest transaction:        2.29
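As a quick sanity check when reading the report, the transaction rate is consistent with the number of hits divided by the elapsed time. The helper below is a hypothetical illustration of that arithmetic using the figures from the report above.

```shell
# Hypothetical helper: average transactions per second.
# $1 = number of hits, $2 = elapsed time in seconds
transaction_rate() {
  awk -v hits="$1" -v secs="$2" 'BEGIN {
    if (secs <= 0) exit 1          # avoid dividing by zero
    printf "%.2f\n", hits / secs
  }'
}

transaction_rate 57 3.36   # prints 16.96
```

Your own figures will differ from run to run, depending on how long Siege ran and how the cluster responded.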